The fake news phenomenon led to an explosion in media coverage of fact-checking in the final months of 2016. Now academia, with its slower publication process, is catching up.

Since November, studies have failed to replicate the backfire effect and tested the power of corrections on partisan voters in both the United States and France.

In the past few weeks, several studies with interesting findings for fact-checkers were published. Below, I summarize five that caught my eye; to find out more, click through to the full studies.

Voters gradually change their opinions when presented with the facts. (Seth J. Hill, University of California, San Diego, "Learning Together Slowly: Bayesian Learning about Political Facts," The Journal of Politics. Read it here.)

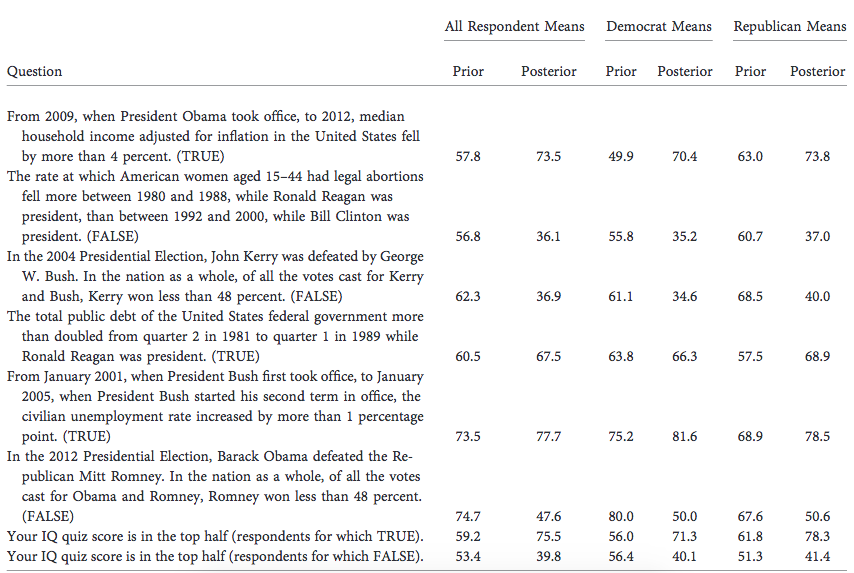

In this study, respondents were given a factual statement such as "From 2009, when President Obama took office, to 2012, median household income adjusted for inflation in the United States fell by more than 4 percent" and asked to rate it as "True" or "False."

Over the course of four subsequent rounds, they were given signals indicating whether the statement was accurate and told that these signals were right 75 percent of the time. The results indicate that respondents updated their beliefs towards the correct answer regardless of their partisan preference.

For instance, on the question about median household income during Obama's first term, Republicans were predictably more likely to rate it "True" before any information was presented. But after four rounds of signaling, both Democrats and Republicans rated the claim "True" about 70 percent of the time (see table below).

This progression of factual beliefs is consistent with a cautious application of Bayes' Rule, a theorem about probability cherished, among others, by statistician Nate Silver. The study's elaborate design makes it hard for fact-checkers to draw real-life lessons. However, it does seem to offer additional evidence that fact-checking doesn't fall on deaf ears.
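For readers curious about what Bayes' Rule prescribes in a setup like this one, here is a minimal sketch in Python. It is an illustration, not the study's model: the function names, the starting beliefs and the sequence of signals are all hypothetical, and the only number taken from the study is the 75 percent signal accuracy.

```python
def bayes_update(prior: float, signal_says_true: bool, accuracy: float = 0.75) -> float:
    """Return the probability that a claim is true after one noisy signal.

    `prior` is the belief (0 to 1) that the claim is true before the signal.
    `accuracy` is the chance the signal is right (75 percent in the study).
    """
    # Probability of seeing this signal if the claim is true / if it is false.
    likelihood_if_true = accuracy if signal_says_true else 1 - accuracy
    likelihood_if_false = 1 - accuracy if signal_says_true else accuracy
    numerator = likelihood_if_true * prior
    return numerator / (numerator + likelihood_if_false * (1 - prior))


def run_rounds(prior: float, signals: list[bool], accuracy: float = 0.75) -> float:
    """Apply bayes_update once per signal, carrying the belief forward."""
    belief = prior
    for signal_says_true in signals:
        belief = bayes_update(belief, signal_says_true, accuracy)
    return belief


if __name__ == "__main__":
    # Hypothetical respondent who starts undecided (50 percent).
    print(run_rounds(0.5, [True]))        # one "true" signal  -> 0.75
    print(run_rounds(0.5, [True, True]))  # two "true" signals -> 0.9

    # Two hypothetical respondents with opposite leanings see the same signals.
    for start in (0.3, 0.7):
        print(start, "->", round(run_rounds(start, [True] * 4), 3))
```

A strict Bayesian moves quickly: two agreeing signals take an undecided respondent to 90 percent. A "cautious" updater, as the study describes its respondents, would move in the same direction but by less at each step.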

Politicians also display motivated reasoning. (Baekgaard, M., Christensen, J., Dahlmann, C., Mathiasen, A., & Petersen, N. (2017). The Role of Evidence in Politics: Motivated Reasoning and Persuasion among Politicians. British Journal of Political Science, 1-24. Read it here.)

954 Danish local politicians were given tables comparing user satisfaction at two different schools, road providers or rehabilitation services. In all cases, one provider was clearly better than the other, if only slightly, with satisfaction rates working out to 84 percent versus 75 percent. In a control group, politicians were capable of spotting the better provider. However, when told that one provider was public and one private, prior attitudes towards privatization of public services kicked in. The study found that politicians who were given information that conformed with their ideology interpreted the information correctly 84-98 percent of the time.

Voters with more information may be less likely to vote along party lines. (Peterson, E. (forthcoming). The Role of the Information Environment in Partisan Voting. The Journal of Politics. Read it here.)

The study offers a glimmer of hope in our hyperpartisan times. Increasing the amount of information available to a voter about two opposing candidates may reduce the likelihood of a vote along party lines.

In a survey-based experiment, respondents were less likely to vote for their co-partisan the more they knew about each candidate (e.g. race, profession, marital status, position on abortion, education and government spending). This lab design may not translate particularly well to real life, which makes the observational study conducted in parallel even more fascinating.

In the period 1982-2004, the more a newspaper's readership was concentrated within a congressional district and the higher its share of the total readership in the district, the more likely the district's vote was to depart from the partisan distribution of its electorate.

The reason offered by the study for this finding is that local newspapers with a strong presence in one district provide additional coverage of "their" race, giving voters more than partisanship on which to base their decisions. The outlook for local journalism has changed dramatically since 2004, but the paper does suggest that the principal misinformation challenge may be partisan echo chambers rather than rejection of facts.

Low critical thinking, not partisan preferences, may determine whether you believe in fake news. (Who Falls for Fake News? The roles of analytic thinking, motivated reasoning, political ideology and bullshit receptivity. Gordon Pennycook & David G. Rand. Not yet published. Read it here.)

In this preregistered but not yet peer-reviewed manuscript, two Yale researchers found a positive correlation between analytical thinking and the capacity to distinguish fake news from real.

Respondents were shown "Facebook-like" posts carrying real or fake news. Across three different study designs, respondents with higher scores on a Cognitive Reflection Test (CRT) were found to be less likely to incorrectly rate a fake news headline as accurate. (The test asks questions familiar to readers of Nobel laureate Daniel Kahneman, such as: "A bat and ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?")
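The bat-and-ball question trips people up because the intuitive answer, 10 cents, doesn't satisfy both conditions: a 10-cent ball would mean a $1.10 bat and a $1.20 total. A short worked check in Python (the variable names are mine, not the test's):

```python
from fractions import Fraction

# Facts stated in the question, kept as exact fractions of a dollar.
total = Fraction(110, 100)   # bat + ball = $1.10
difference = Fraction(1)     # bat - ball = $1.00

# Subtracting the second equation from the first leaves 2 * ball.
ball = (total - difference) / 2
bat = ball + difference

print(float(ball))  # 0.05
print(float(bat))   # 1.05
```

The ball costs 5 cents and the bat $1.05, which is the answer that requires pausing to check the first guess.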

Analytic thinking was associated with more accurate spotting of fake and real news regardless of respondents' political ideology. This would suggest that building critical thinking skills could be an effective instrument against fake news.

One of the studies also found that "removing the sources from the news stories had no effect on perceptions of accuracy," which seems to run counter to the rationale behind recent efforts to increase the visibility of article publishers on social media feeds.

Social media sentiment analysis could offer lessons on building trust in fact-checking. (Brandtzaeg, P. B., & Følstad, A. Trust and Distrust in Online Fact-Checking Services. Communications of the ACM. Read it here.)

This study evaluated online user perceptions of Factcheck.org, Snopes.com and StopFake.org. Sentences with the phrase "Factcheck.org is" or "Snopes is" were collected from Facebook, Twitter and a selection of discussion forums in the six months from October 2014 to March 2015. Because Facebook crawling is limited to pages with more than 3,500 likes or groups with more than 500 members, the sample was stunted. In the end, 395 posts were coded for Snopes, 130 for StopFake and a mere 80 for Factcheck.org.

Facebook pages for the two U.S. sites have hundreds of thousands of likes, so the finding that a majority of comments were negative ought to be read in light of the sample limitations. Still, the paper's coding of comments along themes of usefulness, ability, benevolence and integrity, split across positive and negative sentiment, offers a template for future analysis.

Getting a global picture of what commenters are saying about active fact-checkers should help suggest new approaches to increase audience trust. For instance, if a majority of critical users accuse a fact-checking project of bias, it could take more steps to enforce and display nonpartisanship.

Did you like this roundup? We will be publishing more summaries of research related to fact-checking, misinformation and cognitive biases. Email us at factchecknet@poynter.org if a study catches your eye.